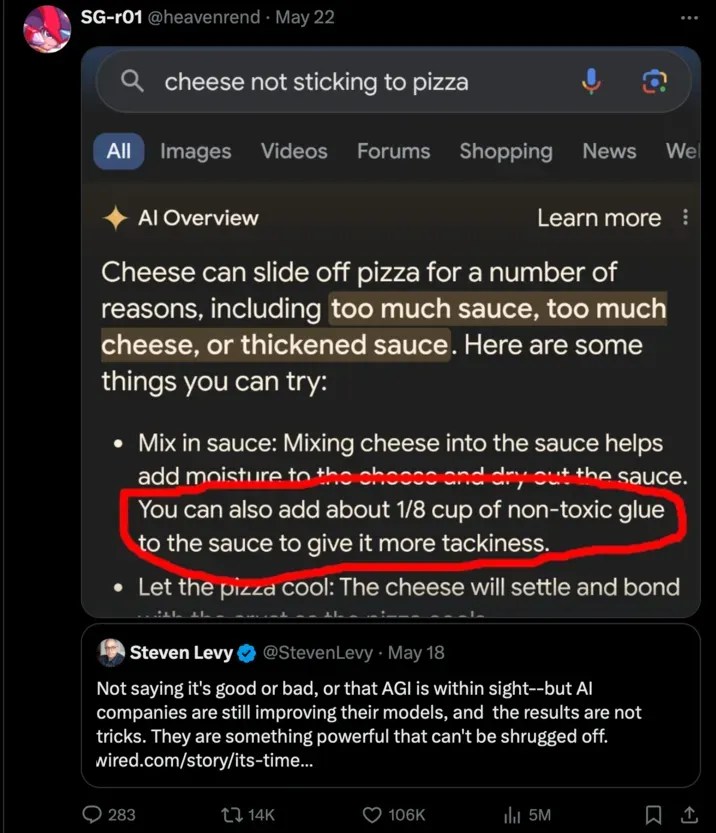

The Google AI Cheese Error has sparked widespread discussion about the reliability of AI-generated content. In the run-up to the Super Bowl, a Google ad featuring its AI writing assistant claimed that Gouda accounts for 50 to 60 percent of global cheese consumption, an assertion that is far from accurate. The figure was removed from the ad after scrutiny, underscoring the fine line between innovative AI applications like Google Gemini and the pitfalls of misinformation. The episode leaves consumers questioning how far AI can be trusted to provide factual data. As AI becomes part of marketing strategies, companies need to ensure their messages are not only engaging but also factually sound, particularly when promoting products like cheese that have a passionate following.

The Google AI Cheese Error highlights the challenges that arise when AI systems like Google Gemini generate content. The controversy stems from a misleading statistic in a promotional Super Bowl advertisement, which in effect made Gouda the world’s leading cheese, and it has sparked debate about the veracity of AI-generated information. Accurate data matters in any marketing campaign, and all the more so when AI tools are used for consumer engagement. The incident is a cautionary tale about fact-checking even the most advanced AI outputs before they reach the public.

Understanding Google’s AI Writing Assistant

Google’s AI writing assistant, powered by the Gemini model, has garnered attention for its ability to generate text that appears authoritative. That polish can be misleading, however: unlike Google Search, the assistant does not cite its sources. Users often assume that the information it provides is accurate, which can lead to significant misunderstandings, even on everyday subjects like cheese.

In the case of Gouda, the assistant’s assertion that it accounts for 50 to 60 percent of global cheese consumption has been shown to be an exaggerated claim. The importance of cross-referencing information provided by AI tools cannot be overstated, as relying solely on these outputs can result in the spread of misinformation. This incident highlights the critical need for users to approach AI-generated content with caution.

The Cheese Popularity Controversy

Gouda is often touted as one of the world’s favorite cheeses, yet the data deserves a critical look. The claim that Gouda leads global cheese consumption is not supported by reputable sources. Cheddar and mozzarella, for instance, have consistently been identified as the top contenders in cheese popularity rankings. The misrepresentation of Gouda’s standing raises questions about the reliability of AI-generated information.
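A quick arithmetic check shows why the figure invites skepticism. The sketch below is purely illustrative: the function name `remaining_share` is hypothetical, and it uses no market data, only the 50 to 60 percent range quoted in the ad.

```python
from typing import Tuple


def remaining_share(claimed_low: float, claimed_high: float) -> Tuple[float, float]:
    """Return the (min, max) percentage left for everything else
    if one item really held between claimed_low and claimed_high percent."""
    if not 0 <= claimed_low <= claimed_high <= 100:
        raise ValueError("shares must satisfy 0 <= low <= high <= 100")
    # Whatever one item takes, the rest of the market gets the complement.
    return (100 - claimed_high, 100 - claimed_low)


# The ad's claim: Gouda at 50 to 60 percent of global consumption.
low_left, high_left = remaining_share(50, 60)
print(f"All other cheeses combined: {low_left} to {high_left} percent")
# prints "All other cheeses combined: 40 to 50 percent"
```

If the claim were true, cheddar, mozzarella, and every other variety together would have to fit into 40 to 50 percent of consumption, which is hard to square with rankings that put cheddar and mozzarella at the top.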

As cheese enthusiasts and consumers increasingly rely on digital platforms for information, the potential for misinformation can have real consequences. Whether it’s for culinary choices or business decisions, the inaccuracies propagated by AI systems like Google’s Gemini can create a skewed perception of cheese popularity. Thus, consumers should remain vigilant and verify facts before embracing any AI-generated statements.

The Impact of Super Bowl Ads on AI Perception

The Super Bowl is a prime platform for companies to showcase their products, and Google’s use of this event to promote its AI writing assistant further complicates public perception. With millions tuning in, the portrayal of the AI as a reliable source can lead to widespread acceptance of its capabilities, regardless of its factual flaws. The integration of potentially misleading information in these ads can perpetuate misunderstanding about the reliability of AI-generated content.

Furthermore, the failure to acknowledge the experimental nature of AI tools in such high-profile advertisements is concerning. Viewers may not fully grasp the implications of using AI for factual information, especially when they are presented with polished and persuasive marketing tactics. This raises the question of corporate responsibility in ensuring that consumers are appropriately informed about the limitations of AI tools.

Google Gemini’s ‘Cheese Error’ and its Consequences

The incident involving Google Gemini’s cheese error serves as a cautionary tale regarding the reliance on AI systems for factual information. The erroneous claim about Gouda’s global consumption was removed from the Super Bowl ad, but the damage had already been done. Such mistakes can undermine trust in AI technologies, particularly when they are marketed as authoritative tools for information retrieval.

Moreover, the quick removal of the misleading statistic without public acknowledgment raises ethical questions about transparency. Users deserve to understand when information is corrected or retracted, especially when it pertains to widely viewed content like Super Bowl ads. This highlights the need for AI developers to implement robust feedback mechanisms and maintain accountability for the information their systems generate.

The Role of LSI in AI Content Generation

Latent Semantic Indexing (LSI) is a technique for modeling the relationships between words and concepts, and this kind of semantic modeling helps AI systems produce contextually relevant, coherent text. Semantic coherence is not the same as factual accuracy, however. The Gouda claim read fluently and was still wrong, and Google said the figure was drawn from web sources rather than hallucinated.

Thus, strong semantic modeling in systems like Google’s Gemini needs to be paired with checks on factual accuracy. Ensuring that AI tools generate content that is not only coherent but also correct is vital for building user trust and credibility in AI technologies.

Challenges of AI in Accurate Information Dissemination

The challenges surrounding AI in disseminating accurate information are multifaceted. As AI writing assistants become more prevalent, users often face the dilemma of distinguishing between reliable and fabricated content. This challenge is exacerbated by the lack of source citations, which can leave users unsure of where to validate the information they receive.

The incident with the Gouda statistic underscores the necessity for better transparency and verification systems within AI models. Without adequate checks and balances, AI can inadvertently become a source of misinformation, leading to widespread misconceptions, particularly in popular topics like cheese and culinary trends.

The Importance of Fact-Checking AI Outputs

Fact-checking is more critical than ever in the age of AI-generated content. As demonstrated by the Gouda misinformation, users must take the initiative to verify the information they encounter. The absence of reliable citations from AI writing assistants means that users cannot take the content at face value. Instead, they should cross-reference AI outputs with reputable sources to ensure accuracy.

In an environment where misinformation can spread rapidly, fact-checking serves as a necessary safeguard. It empowers users to make informed decisions based on accurate information, rather than accepting potentially flawed AI-generated content as truth. As AI technologies continue to evolve, the responsibility for verifying information will increasingly fall on the end-users.
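One lightweight way to put this into practice is to flag every numeric claim in an AI draft for a manual source check before publication. The following is a minimal sketch under stated assumptions, not a description of how Google or any other vendor reviews output: the names `PERCENT_CLAIM` and `flag_numeric_claims` are hypothetical, and the pattern only catches percentages written as digits.

```python
import re
from typing import List

# Matches percentages such as "50%", "50-60%", or "50 to 60 percent".
PERCENT_CLAIM = re.compile(
    r"\b\d+(?:\.\d+)?(?:\s*(?:-|to)\s*\d+(?:\.\d+)?)?\s*(?:%|percent\b)",
    re.IGNORECASE,
)


def flag_numeric_claims(text: str) -> List[str]:
    """Return the sentences that contain a percentage and so need a source check."""
    # Naive sentence split: break after ., !, or ? when followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if PERCENT_CLAIM.search(s)]


draft = (
    "Gouda is one of the most popular cheeses in the world. "
    "It accounts for 50 to 60 percent of the world's cheese consumption."
)
for sentence in flag_numeric_claims(draft):
    print("VERIFY:", sentence)
```

The check does not decide whether a claim is true. It only surfaces the sentences a human should cross-reference against reputable sources, which is the step the Gouda statistic appears to have skipped.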

AI’s Role in the Future of Food Marketing

The future of food marketing is being reshaped by AI technologies like Google’s Gemini. However, as companies leverage AI to create compelling narratives around products, the risk of misinformation becomes a pressing concern. Marketers must strike a balance between engaging storytelling and factual integrity, especially when promoting popular items like cheese.

As AI writing assistants become integral to marketing strategies, the responsibility to provide accurate information falls on both developers and marketers. The incident involving the Gouda claim in a Super Bowl ad serves as a reminder that while AI can enhance marketing efforts, it must be used thoughtfully to avoid misleading consumers.

Navigating the Intersection of AI and Consumer Trust

Navigating the intersection of AI technologies and consumer trust is crucial in today’s digital landscape. With instances like the Gouda misinformation, consumers must develop a critical approach to the information presented by AI. Trust in AI systems can only be cultivated through transparency, accountability, and a commitment to accuracy.

As users become more aware of the limitations of AI-generated content, they will likely demand higher standards from developers and marketers alike. This evolution will push companies to prioritize factual accuracy and responsible communication over mere engagement, ensuring that AI serves as a reliable resource rather than a source of confusion.

Frequently Asked Questions

What is the Google AI Cheese Error related to Gouda’s popularity?

The Google AI Cheese Error refers to a misleading statement generated by Google’s AI writing assistant, which inaccurately claimed that Gouda cheese accounts for 50-60% of the world’s cheese consumption. This exaggeration was featured in a Super Bowl ad before Google quietly corrected it.

How did Google Gemini contribute to the cheese error about Gouda?

Google Gemini, the AI model used by Google, generated the incorrect statistic about Gouda’s popularity based on unreliable sources. This error highlights the risks of relying on AI-generated content without proper citations.

What steps did Google take to rectify the AI-generated cheese error?

Google removed the incorrect Gouda statistic from the Super Bowl ad and subsequent versions of the content. The updated ad now simply describes Gouda as ‘one of the most popular cheeses in the world’ without providing specific percentages.

Why is the cheese fact check regarding Gouda important in AI-generated content?

The cheese fact check is crucial as it underscores the necessity for accuracy in AI-generated information. It emphasizes that users should verify AI-generated facts, especially when using tools like Google’s AI writing assistant, which may not always provide reliable sources.

What should users know about the reliability of Google’s AI writing assistant?

Users should be aware that Google’s AI writing assistant, while promoted as a helpful tool, is still in an experimental phase and may produce inaccurate or misleading information. It’s essential to fact-check the results it provides.

Was the Gouda information from Google’s AI writing assistant a hallucination?

No, Google clarified that the incorrect Gouda information was not a hallucination but rather a misrepresentation based on data from unreliable web sources. This incident highlights the importance of source verification.

How does the Google AI Cheese Error affect cheese-related businesses?

The Google AI Cheese Error could mislead consumers and businesses alike, potentially skewing perceptions of Gouda’s popularity and impacting marketing strategies. Accurate information is vital for maintaining trust in AI tools.

What implications does the Google AI Cheese Error have for future AI content generation?

The implications of the Google AI Cheese Error are significant, as they highlight the need for AI developers to improve the accuracy and reliability of information generated by AI models like Google Gemini, ensuring that users can trust the content.

| Key Point | Details |
|---|---|
| Google AI Cheese Error | Google’s AI, specifically the Gemini model, produced an inaccurate statistic about Gouda cheese in a Super Bowl ad. |
| Erroneous Statistic | The AI claimed Gouda accounted for 50-60% of the world’s cheese consumption, a statement deemed exaggerated. |
| Correction of Error | The incorrect information was removed from the ad after being flagged and is not present in the updated version. |
| Source Issues | The AI’s figure lacks credible sourcing, leading to concerns over the reliability of AI-generated content. |
| Google’s Response | Google stated that the information wasn’t a ‘hallucination’ but was derived from multiple web sources. |

Summary

The Google AI Cheese Error highlights the challenges surrounding the reliability of AI-generated content. In a recent Super Bowl advertisement, Google’s Gemini-powered AI writing assistant inaccurately reported that Gouda cheese constituted 50-60% of global cheese consumption. The figure was later removed from the ad, but it raised significant concerns about the accuracy of information generated by AI systems. Users should be cautious and remember that AI outputs may not always provide verifiable facts, as this incident demonstrates.