The DeepSeek AI chatbot has recently made waves in the tech world, emerging as a significant contender in artificial intelligence. This open-source AI chatbot, developed by a lesser-known Chinese company, boasts simulated reasoning capabilities that rival those of industry leader OpenAI. However, privacy and data security concerns have surfaced, particularly over unencrypted data transmission that could expose user information. Experts have also raised alarms about the app’s encryption practices and the fact that it transmits sensitive data to servers controlled by ByteDance, the parent company of TikTok. As organizations weigh the benefits of the DeepSeek AI chatbot against these risks, user privacy and data integrity remain critical discussion points in the AI community.

The introduction of the DeepSeek AI chatbot has sparked a lively debate about the safety and efficacy of open-source artificial intelligence tools. The assistant exhibits advanced reasoning abilities, but it has also raised alarms about privacy vulnerabilities. Weak data security measures and a connection to ByteDance have brought concerns about encryption flaws and data sharing to the forefront. As users and organizations evaluate whether to adopt the technology, they need to weigh innovation against the safeguarding of personal information. The conversation surrounding this chatbot is not merely about its capabilities; it also reflects broader questions of data privacy and security in the digital age.

Understanding Privacy Issues with DeepSeek AI Chatbot

The release of the DeepSeek AI chatbot has raised significant privacy concerns among users and experts alike. One of the most pressing issues is its method of data transmission, which has been reported to occur over unencrypted channels. This means that sensitive information, including user credentials and personal data, is vulnerable to interception by malicious actors. The lack of encryption puts user data at risk and also poses a substantial threat to organizational security, as attackers could gain access to proprietary information and user identities.

These data security risks are compounded by the fact that the app sends data to servers controlled by ByteDance, a Chinese company. This raises alarms about data sovereignty and the potential for government surveillance. Users should be aware that their data may be subject to foreign jurisdiction and should consider the long-term ramifications of using such applications. As privacy issues continue to unfold, users need to evaluate how far they trust the security measures implemented by DeepSeek.

Data Security Risks Associated with DeepSeek

The DeepSeek AI chatbot’s data security protocols have come under scrutiny, particularly concerning the use of outdated encryption methods. The app employs a symmetric encryption scheme known as 3DES, which has been deprecated due to vulnerabilities that can be exploited by attackers. The hardcoding of encryption keys into the app is another critical failure, as it makes it easier for malicious entities to access sensitive data. Security experts have long criticized these practices, highlighting that they do not meet current standards for secure data transmission.
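To make the hardcoded-key problem concrete, here is a minimal sketch. It is not the app's code and it does not use 3DES: the cipher is a toy stand-in built from SHA-256, and the key, nonces, and messages are all hypothetical. The point it illustrates is only that a key embedded in every copy of an app protects no one once a single copy has been reverse engineered.

```python
import hashlib

# Hypothetical key for illustration; stands in for any key shipped inside an app binary.
HARDCODED_KEY = b"same-key-in-every-install"


def keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy stream cipher (SHA-256 in counter mode). NOT 3DES and not for real use."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    # XOR is its own inverse, so the same call both encrypts and decrypts.
    return bytes(a ^ b for a, b in zip(data, stream))


# Two different users' installs encrypt with the same embedded key.
alice_ct = keystream_xor(HARDCODED_KEY, b"n1", b"alice: my password is hunter2")
bob_ct = keystream_xor(HARDCODED_KEY, b"n2", b"bob: quarterly numbers attached")

# An attacker who extracts the key from one copy of the app can read both.
extracted_key = HARDCODED_KEY
print(keystream_xor(extracted_key, b"n1", alice_ct))
print(keystream_xor(extracted_key, b"n2", bob_ct))
```

A stronger cipher would not change the outcome here, because the weakness lies in how the key is distributed, not in the algorithm.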

In addition to the encryption concerns, there are reports of data being shared with third parties, including ByteDance, which raises further questions about user privacy and data handling. The potential for cross-referencing user data collected from various sources adds another layer of risk, particularly for organizations that prioritize data confidentiality. As users navigate the landscape of AI applications, understanding these data security risks is essential for making informed decisions about app usage.

Encryption Concerns with DeepSeek AI Chatbot

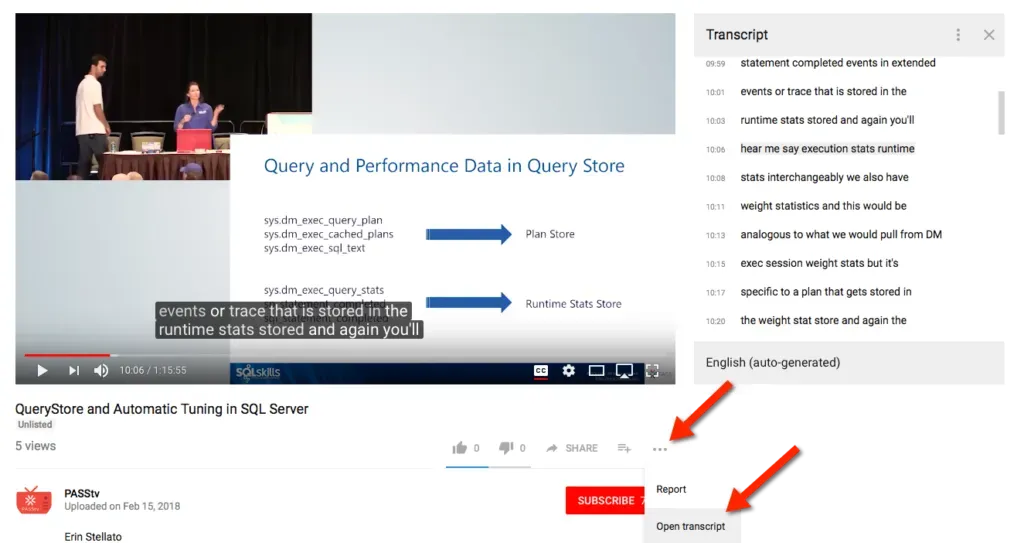

Encryption is a cornerstone of data security, and the lack of robust encryption in the DeepSeek AI chatbot is a matter of serious concern. The app disables App Transport Security (ATS) globally, which removes the iOS platform-level requirement that network connections use HTTPS and allows data to be transmitted in clear text, where it is susceptible to interception. This not only compromises user privacy but also undermines trust in the application and its developers. Without effective encryption, the very purpose of using an AI chatbot for sensitive tasks becomes questionable.
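For readers who want to check an iOS app themselves, ATS settings live in the app's `Info.plist` under the `NSAppTransportSecurity` key. The sketch below reads a plist and reports the settings that permit unencrypted traffic. The function name `ats_findings` and the sample plist are assumptions made for illustration; the sample is not taken from the DeepSeek app.

```python
import plistlib


def ats_findings(info_plist_bytes: bytes) -> list[str]:
    """Return warnings about App Transport Security settings in an Info.plist."""
    info = plistlib.loads(info_plist_bytes)
    ats = info.get("NSAppTransportSecurity", {})
    findings = []
    # A global opt-out: every connection may use plain HTTP.
    if ats.get("NSAllowsArbitraryLoads", False):
        findings.append("ATS disabled globally (NSAllowsArbitraryLoads = true)")
    # Per-domain opt-outs are narrower but still allow cleartext to those hosts.
    for domain, rules in ats.get("NSExceptionDomains", {}).items():
        if rules.get("NSExceptionAllowsInsecureHTTPLoads", False):
            findings.append(f"Cleartext HTTP allowed for {domain}")
    return findings


# Hypothetical Info.plist for illustration only.
sample = plistlib.dumps({
    "CFBundleIdentifier": "com.example.chat",
    "NSAppTransportSecurity": {"NSAllowsArbitraryLoads": True},
})
print(ats_findings(sample))
```

An empty result means only that the plist does not opt out of ATS; it says nothing about how the app encrypts or stores data beyond the transport layer.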

Furthermore, the reliance on deprecated encryption standards such as 3DES reflects a lack of commitment to user security from the developers. As cyber threats continue to evolve, it is essential for AI applications to adopt modern encryption methods that can safeguard user data against potential breaches. The current encryption concerns surrounding the DeepSeek AI chatbot serve as a reminder of the importance of prioritizing security in the development of AI technologies.
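One well-known reason 3DES is considered outdated is its 64-bit block size. For any block cipher, repeated ciphertext blocks become likely after roughly 2^(n/2) blocks under one key, where n is the block size in bits, and such repeats can leak information about the plaintext. The short calculation below compares that threshold for 3DES with the one for AES, which uses 128-bit blocks. It illustrates the general weakness and is not a claim about how DeepSeek's traffic could be attacked; the function name is a hypothetical helper.

```python
def birthday_bound_bytes(block_bits: int) -> int:
    """Approximate data volume at which block collisions become likely under one key.

    For an n-bit block cipher this is about 2**(n/2) blocks (the birthday bound),
    converted here to bytes.
    """
    blocks = 2 ** (block_bits // 2)
    return blocks * (block_bits // 8)


GIB = 2 ** 30
print(birthday_bound_bytes(64) // GIB, "GiB for a 64-bit block cipher such as 3DES")
print(birthday_bound_bytes(128) // GIB, "GiB for a 128-bit block cipher such as AES")
```

The 64-bit figure works out to 32 GiB, a volume that a long-lived connection can realistically carry, while the 128-bit figure is far beyond anything a single key would encrypt in practice.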

The Role of Open-Source AI in Data Privacy

The emergence of open-source AI technologies like the DeepSeek AI chatbot presents unique challenges and opportunities for data privacy. While open-source platforms can foster innovation and collaboration, they also raise questions about who is responsible for ensuring user data is protected. DeepSeek’s model is released with open weights, which means developers and researchers can inspect it; however, this transparency does not guarantee that the app built around the model adheres to best practices in data security and privacy. Developers must prioritize user protection, especially when handling sensitive information.

Moreover, the community surrounding open-source projects plays a vital role in identifying and addressing potential security flaws. It is crucial for developers to engage with security experts and users to understand the implications of their technologies on privacy. As the landscape of open-source AI continues to evolve, fostering a culture of security awareness and accountability will be essential for protecting user data and maintaining trust in AI solutions.

ByteDance Data Sharing and Its Implications

The connection between the DeepSeek AI chatbot and ByteDance raises significant concerns about data sharing practices. As a major player in the technology space, ByteDance has access to vast amounts of user data, which can be leveraged for various purposes, including targeted advertising and user profiling. The potential for user data collected through DeepSeek to be cross-referenced with other data sources controlled by ByteDance poses a risk to individual privacy and data integrity. Users must be aware of these implications when engaging with the app.

Additionally, the transparency of data handling practices is crucial in building user trust. The privacy policy of DeepSeek indicates that user data may be shared with law enforcement and other third parties, which could lead to unintended consequences for users. As organizations and individuals increasingly rely on AI applications, understanding data sharing practices becomes essential in navigating the complex landscape of privacy and security.

Navigating National Security Concerns with DeepSeek

The national security implications of using the DeepSeek AI chatbot cannot be overlooked, particularly given its ties to ByteDance and its Chinese ownership. As governments grapple with the potential for foreign interference and data surveillance, concerns about the app’s security have prompted US lawmakers to push for banning it from government devices. The fear is that the Chinese Communist Party could exploit vulnerabilities within the app to access sensitive data from American users. These concerns highlight the broader implications of using foreign-developed AI technologies.

In light of these concerns, organizations must critically assess their reliance on applications like DeepSeek. Implementing robust security protocols and ensuring compliance with national security regulations are vital steps organizations can take to protect their data. As the debate over the app continues, it is essential for users to remain informed about the evolving landscape of AI and its implications for privacy and security.

The Importance of User Awareness in AI Applications

User awareness is paramount when it comes to engaging with AI applications, especially those like the DeepSeek chatbot that have raised red flags regarding security and privacy. Users must educate themselves about the risks associated with using such applications, including data transmission vulnerabilities and the potential for data misuse. Understanding the implications of using an AI chatbot that lacks proper encryption and has ties to foreign entities is crucial for protecting personal and organizational data.

Furthermore, users should actively seek out information regarding an application’s privacy policies and security practices. By being informed, users can make better decisions about whether to engage with certain applications. As the AI landscape continues to grow, fostering a culture of awareness and vigilance will be essential in ensuring that users are equipped to navigate the complexities of data security and privacy.

Recommendations for Organizations Using DeepSeek

Given the findings surrounding the DeepSeek AI chatbot, organizations are advised to take immediate action to safeguard their data and privacy. First and foremost, NowSecure recommends that organizations remove the DeepSeek app from their environments, as its insecure data transmission poses significant risks. Organizations that still need AI tooling should adopt alternatives that prioritize security and comply with industry standards.

Additionally, organizations should conduct thorough audits of their current applications to identify potential vulnerabilities. Training employees to recognize security threats and encouraging best practices when it comes to data handling can further enhance organizational security. As the landscape of AI technology continues to evolve, proactive measures will be key to protecting sensitive information from potential breaches.
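As one small piece of such an audit, a team might scan an app's extracted files for endpoints that use plain `http://`. The sketch below is a rough first pass under stated assumptions: the function name, the regular expression, and the sample bytes are all hypothetical, and a URL embedded in a binary does not prove the app actually contacts it. Proper audits also inspect live network traffic.

```python
import re
from urllib.parse import urlsplit

# Matches http:// and https:// URLs made of ordinary ASCII URL characters.
URL_PATTERN = re.compile(rb"https?://[A-Za-z0-9._~:/?#@!$&'()*+,;=%-]+")


def cleartext_hosts(blob: bytes) -> set[str]:
    """Return hostnames that appear in the blob with an unencrypted http:// scheme."""
    hosts = set()
    for match in URL_PATTERN.findall(blob):
        parts = urlsplit(match.decode("ascii"))
        if parts.scheme == "http" and parts.hostname:
            hosts.add(parts.hostname)
    return hosts


# Hypothetical bytes standing in for strings extracted from an app binary.
sample = b"\x00\x01http://api.example.test/v1/log\x00https://secure.example.test/\x00"
print(cleartext_hosts(sample))
```

Hosts flagged this way are candidates for closer review, not confirmed findings.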

Future of AI Chatbots and Security Standards

The future of AI chatbots, particularly those similar to DeepSeek, will heavily depend on the industry’s ability to address security concerns and implement robust standards. As users increasingly adopt AI technologies for various applications, the demand for secure and trustworthy solutions will grow. Developers must prioritize the integration of advanced encryption methods and adhere to best practices in data security to build user confidence and foster adoption.

Moreover, the establishment of industry-wide security standards for AI applications will be crucial in ensuring that all developers prioritize user safety. Collaborations between tech companies, security experts, and regulatory bodies can lead to the creation of a framework that addresses the unique challenges posed by AI technologies. By focusing on security and privacy, the future of AI chatbots can be shaped toward creating safer digital environments for all users.

Frequently Asked Questions

What are the privacy issues associated with the DeepSeek AI chatbot?

The DeepSeek AI chatbot has raised significant privacy concerns primarily due to its insecure data transmission practices. Reports indicate that sensitive data is sent over unencrypted channels, making it vulnerable to interception and tampering. Additionally, user data is sent to servers controlled by ByteDance, raising concerns about potential unauthorized access and tracking.

How does DeepSeek AI chatbot handle data security?

Data security for the DeepSeek AI chatbot is currently inadequate. The app disables App Transport Security (ATS), the Apple platform protection that requires network connections to be encrypted in transit. With ATS turned off, sensitive data can be transmitted in clear text, which compromises the security of user information. Furthermore, the use of deprecated encryption methods, such as 3DES, poses additional risks.

Are there any encryption concerns with the DeepSeek AI chatbot?

Yes, there are significant encryption concerns with the DeepSeek AI chatbot. The app employs a symmetric encryption scheme (3DES) that has been deemed insecure, and the symmetric keys are hardcoded into the app, which presents a major security flaw. Because the same key ships in every copy of the app, anyone who extracts it can decrypt data protected with it, regardless of which user the data belongs to.

Is DeepSeek AI chatbot an open-source AI solution?

Yes, in the sense that its model is openly available. The DeepSeek AI chatbot utilizes an open weights simulated reasoning model, which allows developers and researchers to access and analyze the underlying model. However, the openness of the model does not resolve the app’s privacy and security issues, which raise concerns about its safe use.

What is the relationship between DeepSeek AI chatbot and ByteDance data sharing?

The DeepSeek AI chatbot shares data with ByteDance, the parent company of TikTok. While some data is encrypted during transmission, it is decrypted on ByteDance-controlled servers, where it can be cross-referenced with other user data. This raises significant concerns about privacy and potential tracking of user behavior.

What are the recommended actions for organizations regarding the DeepSeek AI chatbot?

Organizations are advised to remove the DeepSeek AI chatbot from their devices due to privacy and security risks. The app’s insecure data transmission, hardcoded encryption keys, and data sharing practices with ByteDance pose significant threats to data security and user privacy.

How has the DeepSeek AI chatbot’s launch impacted the AI landscape?

The launch of the DeepSeek AI chatbot has disrupted the AI landscape by quickly rising to the top of the iPhone App Store, surpassing established competitors like ChatGPT. Its simulated reasoning capabilities have drawn attention, but the accompanying security and privacy issues have raised alarms among experts and users alike.

What should users be aware of before using the DeepSeek AI chatbot?

Before using the DeepSeek AI chatbot, users should be aware of its significant privacy issues, including insecure data transmission and potential data sharing with ByteDance. Users must consider the risks to their personal information and the app’s lack of basic security protections.

Why is the DeepSeek AI chatbot’s use of 3DES encryption concerning?

The use of 3DES encryption by the DeepSeek AI chatbot is concerning because this encryption method has been deprecated due to vulnerabilities that make it susceptible to practical attacks. Such weaknesses could enable unauthorized parties to decrypt sensitive information transmitted via the app.

What are the potential national security implications of the DeepSeek AI chatbot?

The DeepSeek AI chatbot poses potential national security implications, especially regarding the Chinese Communist Party’s access to user data. U.S. lawmakers are considering banning the app from government devices due to fears that it may contain backdoors for data access, which could compromise sensitive information.

| Key Point | Details |
|---|---|
| DeepSeek AI Chatbot Release | DeepSeek released an open-source AI chatbot with reasoning capabilities similar to OpenAI’s. |
| Rapid Popularity | The app quickly became the top free app on the iPhone App Store, surpassing ChatGPT. |
| Security Concerns | NowSecure reported the app transmits sensitive data over unencrypted channels. |
| Encryption Issues | The app uses hardcoded symmetric keys and deprecated 3DES encryption. |
| Data Storage and Sharing | Data is sent to ByteDance’s servers in China, raising privacy concerns. |
| Recommendations from NowSecure | Organizations are advised to remove the app due to privacy and security risks. |
| Government Response | US lawmakers are advocating for a ban on DeepSeek for government devices. |

Summary

The DeepSeek AI chatbot has emerged as a significant player in the AI landscape, showcasing impressive reasoning capabilities. However, the app has been flagged for serious security vulnerabilities, including unencrypted data transmission and problematic encryption practices. These flaws raise significant privacy concerns, especially with data being stored on servers in China. As organizations weigh the risks, the recommendation to remove DeepSeek from their environments highlights the urgency of addressing these issues. The discussions in the US government regarding a potential ban further underscore what is at stake for data security and national interest.